
(The image above is meant to symbolize a future Nordic region where we have embraced artificial intelligence in the right way. The image was created using a photo of me and the AI tools Adobe Firefly and DreamStudio.AI. I then put it together in the image editing program Pixelmator Pro, which also utilizes various AI models to automate the workflow.)

This article was originally written in Swedish.

I Replaced Myself with an AI

Now it writes code for me.

If someone hands you a hammer, is your first instinct to hit yourself in the forehead? Apparently, mine is. With access to one of the world's most advanced LLMs (large language models, a type of artificial intelligence) and a few hours of initial effort, I had a Frankenstein moment. I had created a monster that replaced me. It autonomously went through my code and rewrote it.

Like all idiotic (and hopefully some ingenious) inventions, it started with sheer frustration. I had been working on a new feature in an advanced framework I was unfamiliar with. It's fair to say I had bitten off more than I could chew. OpenAI's brilliant assistant, ChatGPT, came to my aid, and being an assistant is precisely what ChatGPT excels at. At first, I felt extremely foolish. It almost felt like cheating every time I pasted in a piece of code along with an error message and asked it to help me figure out what had gone wrong.

Am I not better than this?

Do I really need to lean on AI to get basic tasks done? It felt like I was just throwing things at the wall to see what would stick. In the end, I swallowed my pride. It's not about how I deliver, but that I do deliver. To be completely honest, is chatting with an AI to solve a problem really that much worse than Googling, copying strange commands from StackOverflow, and pasting them into your terminal until you finally manage to escape VIM (those who know, know)?

After a few long nights and a weekend of intense iteration, throwing problems at ChatGPT and getting candidate solutions back, I finally got it working. Or so I thought. Ten minutes before the morning meeting where I planned to present my fantastic invention, everything crashed. At the last minute, with my tail between my legs, I had to return to my corner of shame: me, ChatGPT, and the codebase I now hated.

This time, however, I looked extra carefully at the data my code had spat out and noticed that my values, which were supposed to be a percentage over time, were not all zeros, as I had previously thought. At the end of the run of zeros, there was a series of 100% values instead. Why was that? Why only zeros up to that point, and then suddenly 100%? A developer who hadn't spent the entire weekend in sheer frustration would quickly have spotted the problem: the percentage was (probably) being calculated with the Integer data type, which has no decimals. Dividing one integer by another discards the fractional part, so any share smaller than the total comes out as 0 and only a complete share comes out as 1, never anything in between (then multiplied by 100, of course). Armed with this new information, I brought out my interactive RubberDuck and briefly explained my theory.

"Yes, that could be correct. PostgreSQL (the database, ed. note) performs calculations in Integer instead of Float if you don't explicitly cast them to Floats. Here's an updated version of your code: […]" The reply came with a complete, working code snippet that solved the problem. I sat staring at the screen as if struck by lightning. Cool, you might think, but hardly revolutionary; you could have Googled that.
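ChatGPT's actual snippet isn't reproduced here, so what follows is a hypothetical illustration of the same failure mode, not the code from the article. It uses SQLite from Python's standard library as a stand-in for PostgreSQL, because SQLite also truncates when both operands of a division are integers. The `progress` table and its `done`/`total` columns are invented for the example. In PostgreSQL the same fix would be written with a cast such as `done::numeric / total * 100`.

```python
import sqlite3

# Hypothetical data: (day, items done so far, total items).
ROWS = [(1, 1, 4), (2, 2, 4), (3, 3, 4), (4, 4, 4)]


def percentages(cast_to_real: bool) -> list[float]:
    """Return the completion percentage per day, with or without a cast."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE progress (day INTEGER, done INTEGER, total INTEGER)")
    conn.executemany("INSERT INTO progress VALUES (?, ?, ?)", ROWS)

    # integer / integer truncates, so 1/4, 2/4 and 3/4 all become 0 and only
    # 4/4 becomes 1. Casting one operand to REAL keeps the fractional part.
    numerator = "CAST(done AS REAL)" if cast_to_real else "done"
    query = f"SELECT {numerator} / total * 100 FROM progress ORDER BY day"
    result = [row[0] for row in conn.execute(query)]
    conn.close()
    return result


if __name__ == "__main__":
    print(percentages(cast_to_real=False))  # [0, 0, 0, 100]
    print(percentages(cast_to_real=True))   # [25.0, 50.0, 75.0, 100.0]
```

Without the cast, the series is exactly the "zeros, then 100%" pattern described above; casting only one operand is enough, since the other is promoted.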

The thing is, I never actually told ChatGPT that I was using PostgreSQL. It made that connection all by itself, based only on the problem I described. Still not that revolutionary, you might think. But that's when I realized that every dead end I had hit with ChatGPT over the weekend could have been avoided if I had asked the right questions and given it more information.

But what does this have to do with the autonomous developer? On the surface, the difference between GPT-3.5 and GPT-4 isn't particularly significant. If you ask simple questions or request simple tasks, both models give roughly the same results. But GPT-4 has an ability that GPT-3.5 can't handle: given instructions, it can learn to use tools. Used correctly, that gives GPT-4 enormous leverage and multiplies what it can get done.

Carl recently asked me how I felt about all the AI news coming out every day. Without thinking, I replied that it makes me sweat. And it's true: so much is happening in such a short time that I can't even take in and process the information. You wake up one morning, and suddenly there are AI models, tools, and features that, just a year ago, I didn't think I would see in my lifetime.

Later, when I wanted to visualize the data points I had put together, I couldn't shake the idea of giving GPT-4 its own tools. After all, it had solved every one of my problems, provided I gave it the necessary information.

Is that even possible, you wonder?

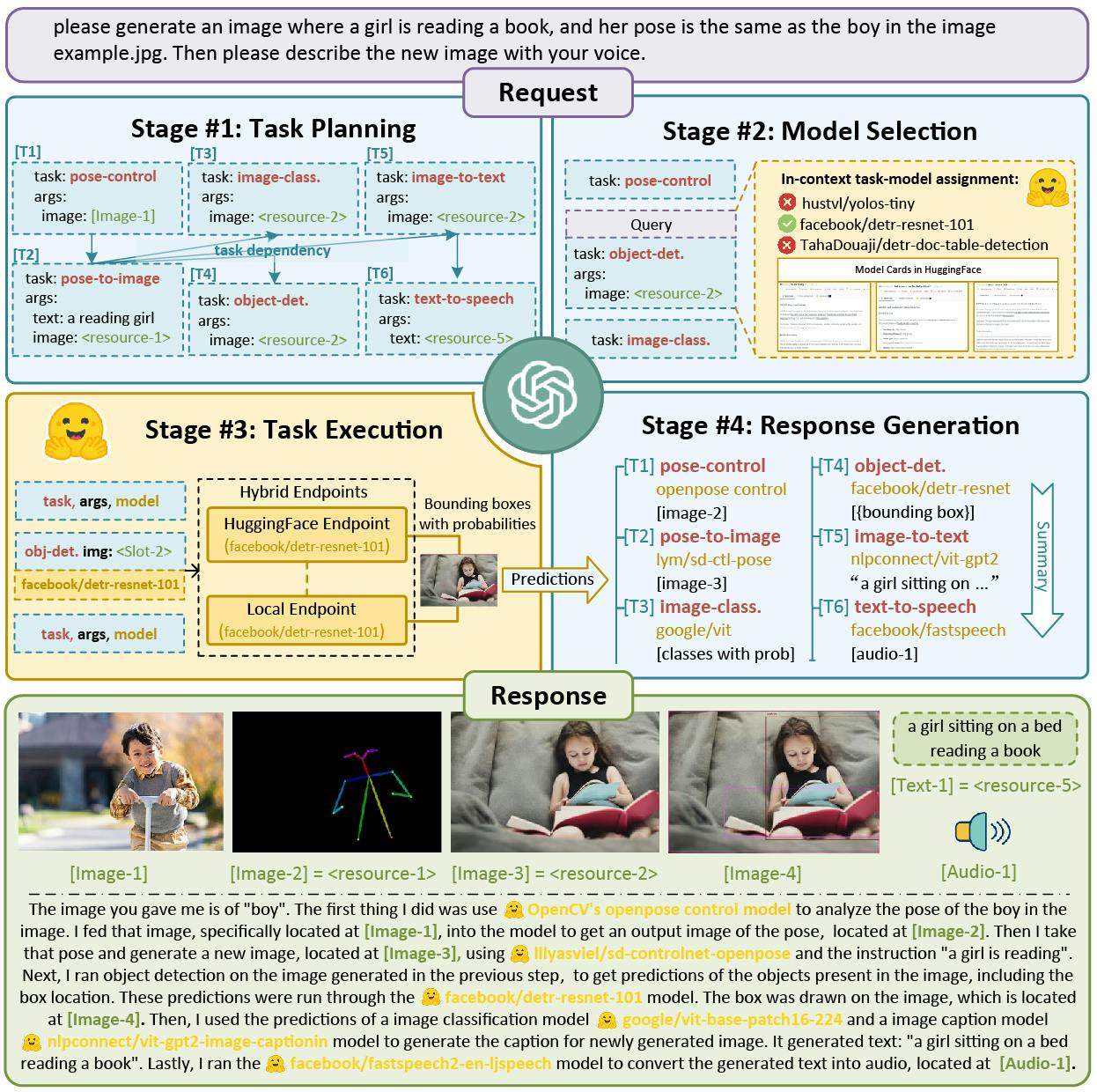

Yes, absolutely. In recent weeks, projects built on GPT models have appeared online that can do simple planning and carry out multi-step tasks. Microsoft JARVIS, built on the research behind HuggingGPT, is probably one of the most impressive examples. It can combine different AI models to reach a desired outcome: you tell it what you want, and it uses various AI models as tools to produce the result. It also works with more than text; for example, you can give it a picture, ask it to create a new image resembling it, and then have it describe in text what the image contains. Here is their own example:

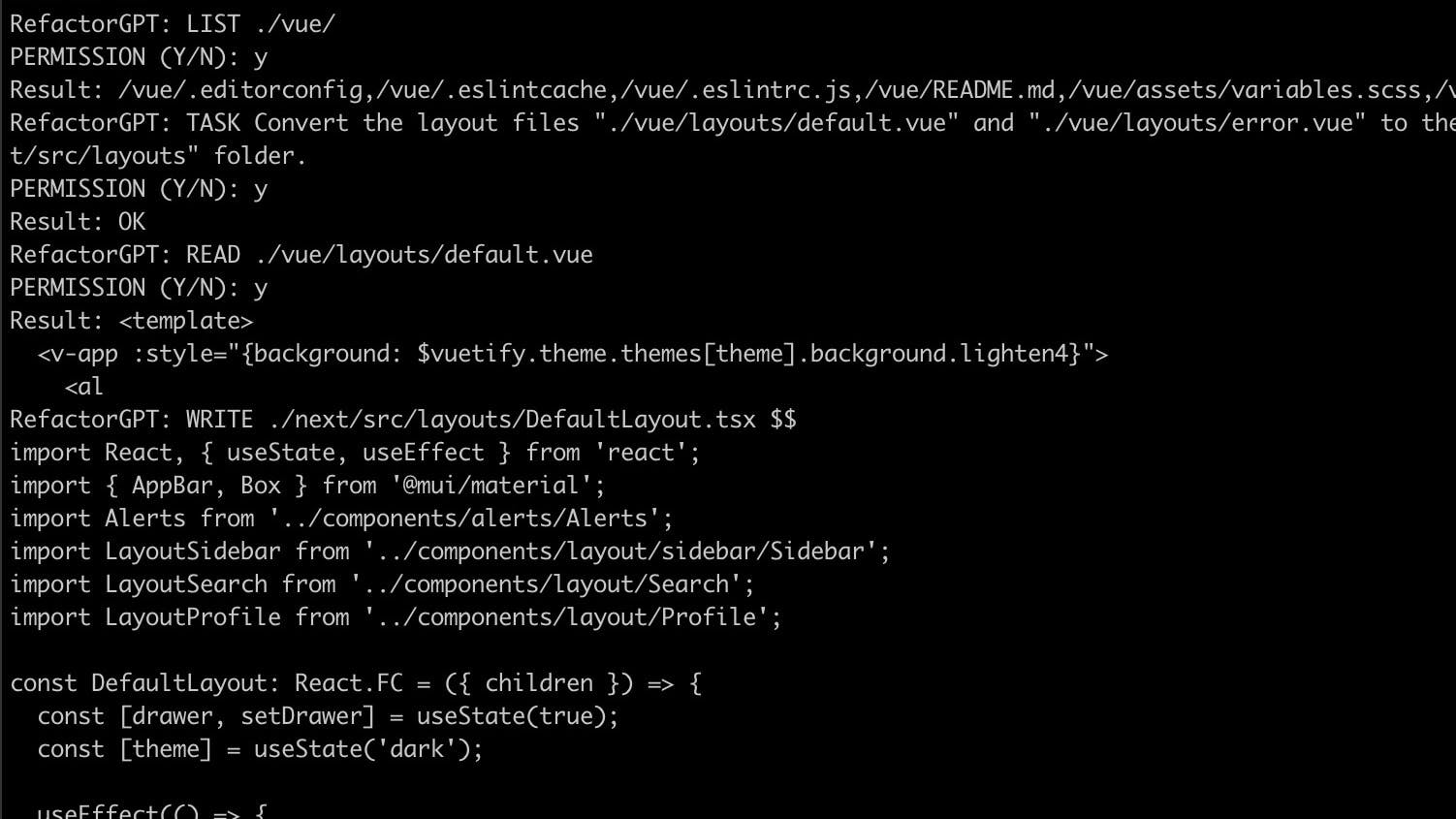

I rummaged through my email inbox and found, to my surprise, an invitation to the GPT-4 API, which meant I could communicate with GPT-4 programmatically. Why on earth did I get one? I can easily name at least ten other developers who would deserve it more. Anyway, a few hours later I had written instructions for it, given it tools to read and write files on a file system, and asked it to define its own tasks.
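The author's instructions and tooling aren't shown, so this is a hypothetical sketch of the general pattern behind "giving a model tools": the model replies with a structured action, the program runs the matching tool, and the result is appended to the conversation until the model says it is finished. `scripted_model` is a stand-in for a real GPT-4 API call (no OpenAI client is used), and the action format, tool names and file names are all assumptions made for illustration.

```python
import json
from pathlib import Path
from tempfile import TemporaryDirectory
from typing import Callable


def make_tools(root: Path) -> dict[str, Callable[..., str]]:
    """Hypothetical file tools, confined to a sandbox directory."""

    def safe(path: str) -> Path:
        target = (root / path).resolve()
        if not target.is_relative_to(root.resolve()):
            raise ValueError(f"path escapes sandbox: {path}")
        return target

    def read_file(path: str) -> str:
        return safe(path).read_text()

    def write_file(path: str, content: str) -> str:
        target = safe(path)
        target.parent.mkdir(parents=True, exist_ok=True)
        target.write_text(content)
        return f"wrote {len(content)} characters to {path}"

    return {"read_file": read_file, "write_file": write_file}


def scripted_model(messages: list[dict]) -> dict:
    """Stand-in for an LLM: picks the next action from how many tool results it has seen."""
    seen = sum(1 for m in messages if m["role"] == "tool")
    if seen == 0:
        return {"action": "read_file", "args": {"path": "Hello.vue"}}
    if seen == 1:
        # A real model would translate the Vue source it just read.
        jsx = "export const Hello = () => <p>Hello</p>;\n"
        return {"action": "write_file", "args": {"path": "Hello.jsx", "content": jsx}}
    return {"action": "finish", "args": {}}


def run_agent(model, tools, task: str, max_steps: int = 10) -> list[dict]:
    """Loop: ask the model for an action, execute it, feed the result back."""
    messages = [{"role": "user", "content": task}]
    for _ in range(max_steps):
        reply = model(messages)
        messages.append({"role": "assistant", "content": json.dumps(reply)})
        if reply["action"] == "finish":
            break
        try:
            result = tools[reply["action"]](**reply["args"])
        except (KeyError, TypeError, OSError, ValueError) as err:
            # Report failures back to the model instead of crashing the loop.
            result = f"error: {err}"
        messages.append({"role": "tool", "content": result})
    return messages


if __name__ == "__main__":
    with TemporaryDirectory() as tmp:
        root = Path(tmp)
        (root / "Hello.vue").write_text("<template><p>Hello</p></template>\n")
        run_agent(scripted_model, make_tools(root), "Refactor Hello.vue to React.")
        print((root / "Hello.jsx").read_text())
```

The `max_steps` cap and the sandboxed paths are design choices for safety: an autonomous loop that writes files should be bounded and unable to touch anything outside its working directory.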

Monday evening, sorry, no, Tuesday at 00:27

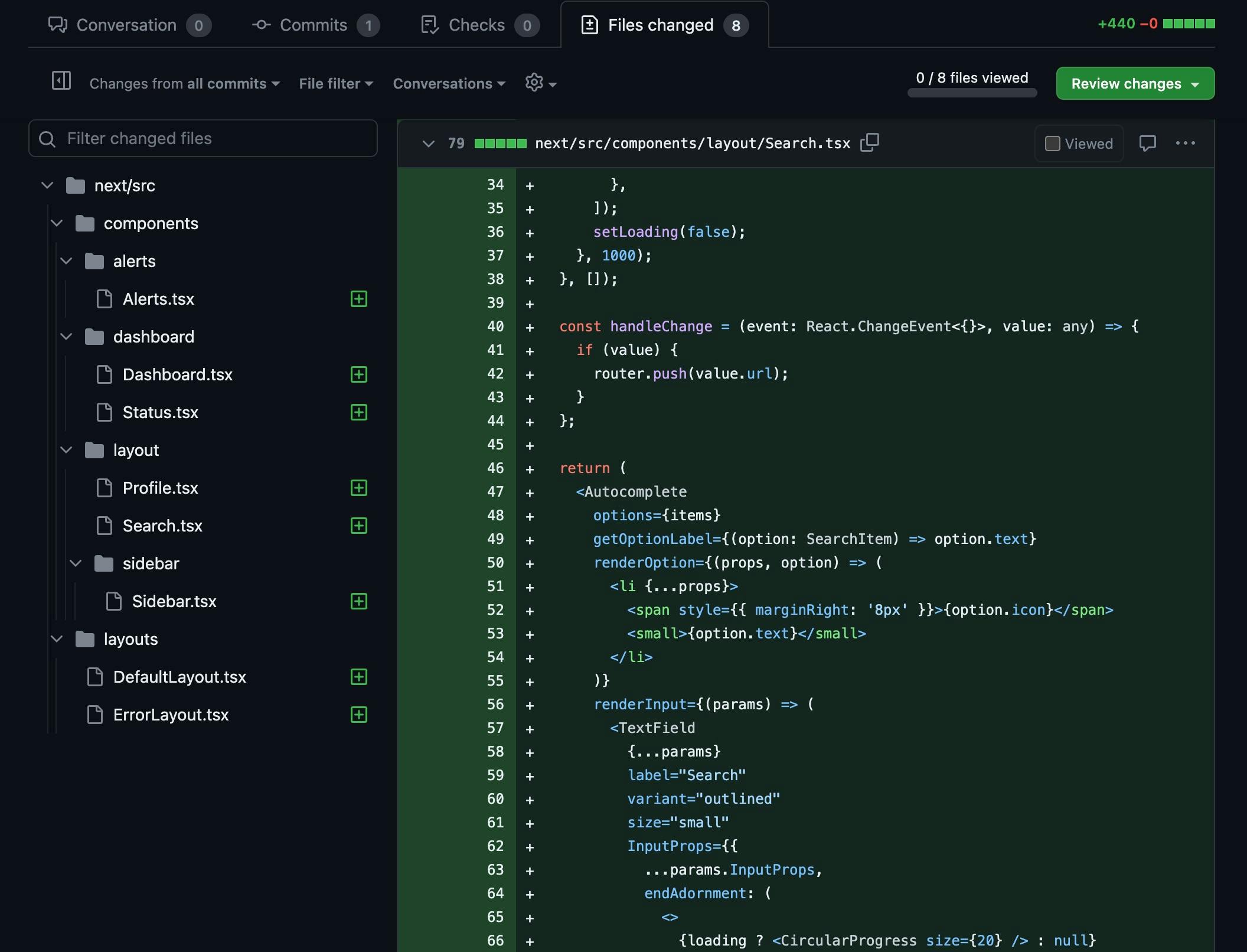

For the first time, the model ran flawlessly until it ran out of memory. Before it collapsed, it had refactored (that is, rewritten) eight files from the Vue framework to React. In just a few hours, I had put together an autonomous refactoring robot that reads code written for one framework and spits out correct, runnable code for another, even following the right linting format (which is crazy in itself). It also chose its own strategy, which is just as insane. The result was roughly what a junior developer might have accomplished in one to two hours of work. The cost? About $6 for the API calls.

Tuesday was spent in existential anxiety.

I felt like a mad scientist who had created a monster, or who had blown up his own laboratory. It really took a whole day to digest the experience.

Is the end near for us developers?

Not yet. Not at all! GPT-4 has no independence and no drive, and it doesn't think critically, at least not unless you ask it to. Put bluntly, it's a very good assistant but a bit naive. If you tell GPT-4 that PostgreSQL does in fact calculate in Floats, it will blindly believe you. Then it starts hallucinating and guessing at another solution, one that may not even exist but still sounds plausible if you're not familiar with the subject. It will probably lead you both astray, perhaps for hours.

A developer with more experience than me in those frameworks and that database would have asked critical follow-up questions if I had asked for help, and that is a vital difference. Your colleagues, however, usually don't appreciate a call on a Friday night at 23:11 about calculating percentages. If you work with GPT-4, treat it like a smarter RubberDuck (a programming term for explaining your problem to a rubber duck as a way to understand and solve it). You supply the critical thinking yourself, and it magically spits out analyses and working code. Unless you're unlucky.

Regardless, it feels like the barriers between programming languages and frameworks have been significantly lowered. In the future, perhaps people won't identify themselves as "Java developers." I think the roles will gradually merge. Instead of programming in a programming language, maybe you'll write instructions in plain English or Swedish? Rather than AI, we prefer to talk about human + machine: IA, or intelligence augmentation. Broad developer skills such as technical understanding, logic, and architecture will likely matter more than narrow, language-specific expertise. Maybe most of us will even become "technology architects"?

I think most people don't mind automating repetitive and "boring" tasks, and my little experiment shows that it's possible. Autonomous robots that update outdated code, conduct "peer reviews," give feedback on code, refactor and improve it, and more will probably appear in large numbers soon. And for us developers, it will feel completely normal, just like having a robot vacuum cleaner at home. Sure, simpler versions of these have existed before, but if I can build an autonomous programming robot in a few hours, someone out there can certainly do far more advanced things with the new tools.

One thing is certain: AI models will never be worse than they are right now.